Hello Multi-Core CPUs (Finally)

Battling the laws of physics and Moore’s “law”, chip manufacturers like Intel and AMD have over the past decade shifted their focus from speeding up CPU clocks to increasing CPU core density. This transition has been painful for storage industry incumbents. Their software stacks were developed in the 1990s to be “single-threaded”, so they have needed years of painstaking effort to adapt to multi-core CPUs. Architectures developed after about 2005 have typically been multi-threaded from the beginning, to take advantage of growing core densities.

One of the more interesting aspects of recent vendor announcements is just how long it has taken the storage industry behemoths to upgrade their products for multi-core CPUs. Judging by all the hoopla, these are big engineering feats. Even a fully multi-threaded architecture like Nimble Storage’s CASL (Cache Accelerated Sequential Layout) needs software optimization to take full advantage of the added horsepower whenever CPU core density takes a big jump. The difference is that for Nimble these are maintenance releases, not announcement-worthy major or minor releases. We routinely deliver new hardware with the needed resiliency levels. We then add a series of software optimizations over subsequent maintenance releases to squeeze out significant performance gains.

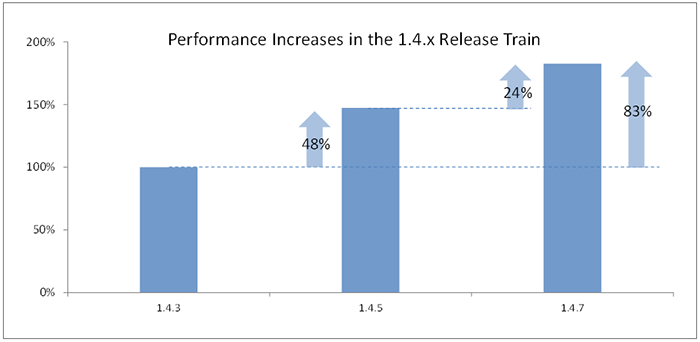

Here’s an example of what has been accomplished in the 1.4.x release train over the last several months. Nimble customers have particularly appreciated that these performance improvements were delivered as non-disruptive firmware upgrades (no downtime) and did not require any additional disk shelves or SSDs (more on how we do this below).

Beyond Multi-Threading

Multi-core readiness is nice, but there is an even more fundamental distinction between architectures. Using CPU cores more efficiently in a “spindle bound” architecture still leaves it a spindle bound architecture. Suppose your performance is directly proportional to the number of high RPM disks (or expensive SSDs). Better CPU utilization and more CPU cores may raise the ceiling of the system, but you will still need exactly the same number of high RPM disks or SSDs to reach a given performance level. So meeting high random IO performance requirements still takes ungodly numbers of racks full of disk shelves, even with a smattering of SSDs.
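A small sketch makes the spindle-bound arithmetic concrete. The per-drive IOPS values and controller limits below are illustrative rule-of-thumb assumptions, not measurements of any product. It shows only that random IOPS scale with drive count. A faster controller raises the ceiling but does not reduce the number of drives needed.

```python
import math

# Rough rule-of-thumb random IOPS per drive. These are illustrative
# assumptions, not measurements of any particular drive or array.
IOPS_PER_DRIVE = {"15k_rpm": 180, "7.2k_rpm": 80}


def drives_needed(target_iops: int, drive_type: str) -> int:
    """In a spindle-bound design, random IOPS scale with drive count,
    so the drive count is the target divided by per-drive IOPS, rounded up."""
    return math.ceil(target_iops / IOPS_PER_DRIVE[drive_type])


def deliverable_iops(controller_limit: int, n_drives: int, drive_type: str) -> int:
    """The system delivers the lesser of the controller (CPU) ceiling and
    what the spindles can sustain; more cores only raise the first term."""
    return min(controller_limit, n_drives * IOPS_PER_DRIVE[drive_type])


if __name__ == "__main__":
    n = drives_needed(50_000, "15k_rpm")
    for limit in (40_000, 80_000):  # hypothetical controller ceilings
        iops = deliverable_iops(limit, n, "15k_rpm")
        print(f"{n} drives, controller limit {limit}: {iops} IOPS")
```

For a hypothetical 50,000 IOPS target, `drives_needed(50_000, "15k_rpm")` returns 278. Doubling a hypothetical controller limit from 40,000 to 80,000 IOPS raises what those 278 drives deliver from 40,000 to 50,040. Halving the drive count to 139, however, caps the system at 25,020 IOPS no matter how many cores the controller has.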

The Nimble Storage architecture does something very different. It uses the plentiful CPU cores (and flash) to turn storage performance (IOPS) from a “spindle count” problem into a CPU core problem. This lets us deliver extremely high performance with very few low RPM disks and commodity flash. It also brings big gains in price/performance and density to boot (in many cases needing 1/10th the hardware of competing architectures).

Similarly, the availability of more CPU cores does little to fix other fundamental limitations in some older architectures, such as compression algorithms that are constrained by disk IO performance, or heavy-duty snapshots that carry big performance (and in some cases capacity) penalties.

Thinking Outside the (Commodity) Box

It’s always good to raise the performance limits of the storage controllers, because this lets you deliver more performance from a single storage node (improving manageability for large-scale IT environments). Here again, though, many (though not all) of the older architectures have a fundamental limitation. They can use only a limited number of CPU cores in a system if they want to leverage commodity hardware designs (e.g. standard Intel motherboards).

A solution to this problem has been known for a long time: a horizontal clustering (scale-out) architecture. This approach allows a single workload to span the CPU resources of multiple commodity hardware enclosures, while preserving the simplicity of managing a single storage entity or system. In the long run, this is the capability that lets an architecture scale performance cost-efficiently without expensive, custom-built, refrigerator-like hardware. It can also allow multiple generations of hardware to coexist in one pool, with seamless load balancing and non-disruptive data migration. That eliminates the horrendous “forklift” upgrades that one of these recent vendor announcements promises to put its customers through.
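The following minimal, hypothetical placement sketch illustrates how one workload can span several enclosures. A single volume's logical blocks are striped round-robin across cluster nodes, so its IO, and the CPU work needed to serve it, is spread over every node. The node names, stripe size and placement rule are assumptions for illustration, not a description of any vendor's implementation.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable

# Hypothetical stripe size: consecutive runs of this many blocks stay on one node.
STRIPE_BLOCKS = 256


def owner_node(block: int, nodes: list[str]) -> str:
    """Return the node that serves a logical block of one volume.

    Consecutive stripes are assigned to nodes round-robin, so a single
    volume's blocks (and the CPU work to serve them) land on every node.
    """
    stripe = block // STRIPE_BLOCKS
    return nodes[stripe % len(nodes)]


def load_per_node(blocks: Iterable[int], nodes: list[str]) -> Counter:
    """Count how many of the given blocks each node is responsible for."""
    return Counter(owner_node(b, nodes) for b in blocks)


if __name__ == "__main__":
    cluster = ["node-a", "node-b", "node-c", "node-d"]
    print(load_per_node(range(4096), cluster))
    # Adding a node changes placement, which is what triggers data migration.
    print(load_per_node(range(4096), cluster + ["node-e"]))
```

With four nodes, a 4,096-block volume puts 1,024 blocks on each node. When a fifth node is added and placement is recomputed, the blocks whose owner changes are the ones that must migrate. This naive modulo rule relocates most stripes when the node count changes. For that reason, real scale-out systems generally use placement schemes designed to limit data movement, and they may weight nodes so that different hardware generations carry proportionate load.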

So congratulations are in order to the engineering teams at our industry peers who worked so hard to make aging architectures multi-core ready. Still, this is just a small step forward in an industry that has moved ahead by leaps and bounds.